Interested in Joining Us?

Consider becoming a PC member, paper author, speaker, or volunteer!

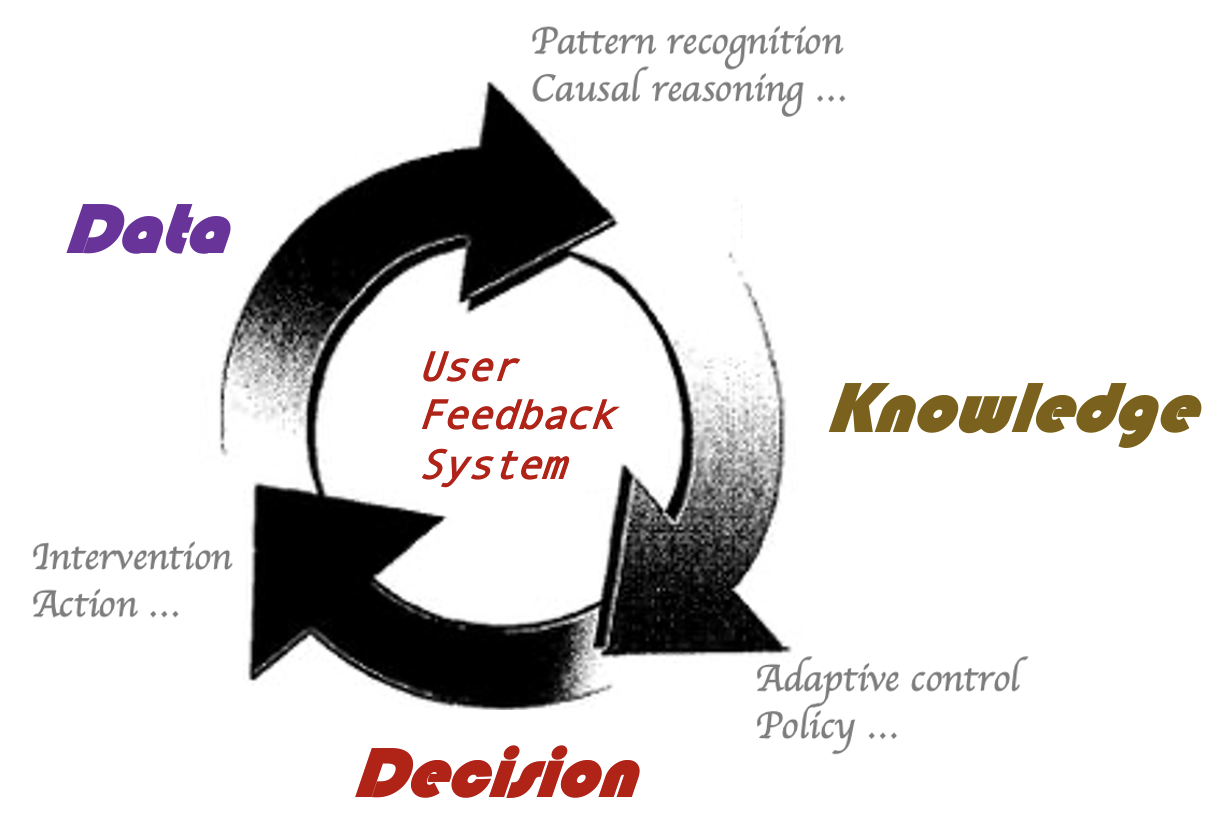

IR and recommender systems differ from other machine learning domains because they are inherently part of an ecosystem -- in the simplest case, a world of items and users. In these ecosystems, system designers face a broad range of decisions -- e.g., how to balance popularity, which incentives should be given to which users, or what safeguards to put in place to ensure the platform thrives in the long run. Real-world IR and recommender systems face many complex decision-making challenges, but existing approaches often make oversimplified assumptions about the environment, data, and human behavior.

Our workshop aims to unite interested scholars, researchers, practitioners and engineers from various industries and disciplines for a comprehensive discussion of emerging challenges and promising solutions.

The information and logistics of our workshop are listed as follows.

All the accepted submissions will be presented at the workshop, either in oral sessions or the poster session, and will be included in the conference proceedings.

We invite quality research contributions and application studies in different formats. Workshop papers that have been previously published or are under review for another journal, conference or workshop will not be considered for publication. Workshop papers should not exceed 12 pages in length (maximum 8 pages for the main paper content + maximum 2 pages for appendixes + maximum 2 pages for references). Papers must be submitted in PDF format according to the ACM template published in the ACM guidelines, selecting the generic “sigconf” sample. The PDF files must have all non-standard fonts embedded. Workshop papers must be self-contained and in English. The reviewing process is double-blind.

At least one author of each accepted workshop paper has to register for the main conference. Workshop attendance is only granted for registered participants.

We welcome submissions from a broad range of topics.

Recommender systems, as the intermediary in the large ecosystem of users, items and creators, play a critical role in its long-run success. Industrial recommender systems, however, suffer from the closed feedback loop effect and tend to exploit popular choices, leaving a large majority of the item corpus and creator base under-discovered. In this talk, I will discuss our work in studying exploration, in particular what we refer to as item exploration, which aims at discovering fresh and tail items/creators in recommender systems. The talk will shed light on the challenges in measuring the benefits of exploration, and present new experiment designs to systematically quantify the value of exploration to the long-term user experience and ecosystem health. I will discuss our work in building dedicated exploration stacks in an industrial recommendation platform, as well as making existing recommendation stacks more exploration friendly. I will go over some of the main technology bets we are investing in to bring efficient exploration, leading to maximum long-term ecosystem growth with minimum short-term user cost.

Recording

Online job marketplaces such as Indeed.com, CareerBuilder and LinkedIn Inc. are helping millions of job seekers find their next jobs while thousands of corporations and institutions fill their open positions at the same time. On top of that, the COVID-19 pandemic, from 2020 up to now, has profoundly transformed workplaces, creating and driving remote work environments around the world. While this emerging industry has generated tremendous growth in the past several years, technological innovations around it have yet to come. In this talk, I will discuss how the technologies the industry heavily relies on, such as search, recommender and advertising systems, are deeply rooted in their more generic counterparts, which may not address the unique challenges of this industry and therefore hinder potentially better products for both job seekers and recruiters. In addition, observations and evidence indicate that users, including job seekers, recruiters and advertisers, behave differently on these novel two-sided marketplaces, calling for a better systematic understanding of users in this new domain.

Recording

Modern advertising systems are built on a combination of machine learning, distributed systems, statistics, and game theory. Recent work in recommender systems has illuminated the distinction between the prediction paradigm (which underlies much of machine learning) and the decision paradigm, which accommodates feedback loops and partial observability. Advertising is further subject to strategic actors and marketplace-level dynamics. Our disciplines lack many of the formal tools to reason about large marketplaces that are intermediated by machine learning models.

In this talk I’ll give an introduction to our advertising domain at Amazon, before moving on to discuss recent work on decision making in ads: learning to bid, and learning to conduct auctions. In both cases we combine machine learning, counterfactual reasoning and optimized decision making with strategyproofness and incentive compatibility, highlighting the opportunities for exciting new work at the intersection of multiple disciplines.

Recording

Conversational systems are emerging as a ubiquitous tool to help users in a variety of important tasks. Two challenges in conversational systems motivate much of my ongoing research: (1) how to enable high-quality personalization, so that users can have satisfying experiences; and (2) how to combat unfairness and bias that are seemingly inherent in these systems. In this talk, I will present recent work in my lab towards tackling these two challenges. I'll conclude with thoughts on future directions.

Recording

Abstract: Large scale deep learning recommender system models, which are used for predicting top-k items given a query (typically a user identifier), learn the user-to-item propensities from large amounts of training data containing many (user, item, time) tuples. Unlike simpler bi-linear models such as Matrix Factorization, deep learning recommender system models rarely model users explicitly but rather model them via the items they have consumed in the past, with or without temporal ordering information. This allows the model to extrapolate the preference matching to many unseen users as long as the items these unseen users have interacted with are seen during training. However, pure item identifier based models are often incapable of assigning faithful propensities to novel items not previously seen during training. This is the classical item cold start problem in recommender systems. Item content features (e.g. item description, movie synopsis, product image, etc.) become useful in such cold start scenarios as they become a proxy for the item identifier. In this paper, we investigate a rather extreme cold start situation wherein the entire application is treated as cold start. Imagine two related businesses whose product inventory is similar in content type but not in identity. Further assume that one of them lacks sufficient user-item interactions, limiting us from building a personalization model. The question we want to ask is whether we can obtain domain-agnostic information from the data-rich domain (the source domain) and transfer it to the data-poor domain (the target domain).

To this end, in this talk, we present a Zero Shot Transfer Learning model that transfers such learned task-agnostic knowledge from the source to the target domain. We conduct offline experiments on large scale real world datasets and present the results and findings. Time permitting, we will also talk about a novel concept we first introduced back in 2021, Language Models as Recommender Systems, where we show how we can leverage large pre-trained language models and prompt engineering to generate recommendations in a zero shot setting.

Recording

| Time | Title | Speaker |
|---|---|---|
| 9:00 am - 9:10 am (CDT) | Welcome and opening remarks | Host chair |
| 9:10 am - 9:50 am (CDT) | Keynote | James Caverlee (Texas A&M University). Topic: Personalization and Fairness in Conversational Systems |
| 9:50 am - 10:00 am (CDT) | Oral presentation | CLIME: Completeness-Constrained LIME. Presenter: paper authors |
| 10:00 am - 10:40 am (CDT) | Keynote | Ben Allison (Amazon). Topic: Making Decisions in Sponsored Advertising |
| 10:40 am - 11:00 am (CDT) | Coffee break | Social events |
| 11:00 am - 11:10 am (CDT) | Oral presentation | Investigating Action-Space Generalization in Reinforcement Learning for Recommendation Systems. Presenter: paper authors |
| 11:10 am - 11:50 am (CDT) | Keynote | Liangjie Hong (LinkedIn). Topic: Computational Jobs Marketplace |
| 11:50 am - 12:30 pm (CDT) | Poster session | All contributed authors |
| 12:30 pm - 1:30 pm (CDT) | Lunch break | |
| 1:30 pm - 2:10 pm (CDT) | Keynote | Anoop Deoras (Amazon). Topic: Zero Shot Recommenders, Large Language Models and Prompt Engineering |
| 2:10 pm - 2:20 pm (CDT) | Oral presentation | Interleaved Online Testing in Large-Scale Systems. Presenter: paper authors |
| 2:20 pm - 3:00 pm (CDT) | Keynote | Minmin Chen (Google Research). Topic: Exploration in Recommender Systems |
| 3:00 pm - 3:30 pm (CDT) | Coffee break | |
| 3:30 pm - 3:40 pm (CDT) | Oral presentation | On Modeling Long-Term User Engagement from Stochastic Feedback. Presenter: paper authors |
| 3:40 pm - 4:40 pm (CDT) | Panel discussion | |

This year, we received more than 20 high-quality submissions; 4 papers were selected for oral presentation, and 7 papers were accepted for highlight presentation at the poster session. Authors of the accepted papers should follow the emailed instructions to submit the camera-ready version and prepare for the presentation at the workshop. Please contact daxu5180 @ gmail.com with any questions.

Interleaved Online Testing in Large-Scale Systems (Link to Presentation)

Author: Nab Bi (Amazon), Bai Li (Amazon), Ruoyuan Gao (Amazon), Graham Edge (Amazon), Sachin Ahuja (Amazon)

Fairness-Aware Differentially Private Collaborative Filtering (Link to Presentation)

Author: Zhenhuan Yang (Etsy), Yingqiang Ge (Rutgers University), Congzhe Su (Etsy), Dingxian Wang (Etsy), Xiaoting Zhao (Etsy), Yiming Ying (University at Albany)

Real-Time Recommendation of Psychotherapy Dialogue Topics (Link to Presentation)

Author: Baihan Lin (Columbia University), Guillermo Cecchi (IBM), Djallel Bouneffouf (IBM)

On Modeling Long-Term User Engagement from Stochastic Feedback (Link to Presentation)

Author: Guoxi Zhang (Graduate School of Informatics, Kyoto University), Xing Yao (China Central Depository & Clearing Co., Ltd.), Xuan-Ji Xiao (Shopee Inc.)

Promoting Inactive Members in Edge-Building Marketplace (Link to Presentation)

Author: Ayan Acharya (LinkedIn), Siyuan Gao (LinkedIn), Borja Ocejo (LinkedIn), Kinjal Basu (LinkedIn), Ankan Saha (LinkedIn), Keerthi Selvaraj (LinkedIn), Rahul Mazumdar (LinkedIn), Parag Agrawal (LinkedIn), Aman Gupta (LinkedIn)

CLIME: Completeness-Constrained LIME (Link to Presentation)

Author: Claudia Roberts (Princeton University), Ehtsham Elahi (Netflix), Ashok Chandrashekar (WarnerMedia)

Robust Stochastic Multi-Armed Bandits with Historical Data (Link to Presentation)

Author: Sarah Boufelja Yacoubi (Imperial College London), Djallel Bouneffouf (IBM Research), Sergiy Zhuk (IBM Research)

Investigating Action-Space Generalization in Reinforcement Learning for Recommendation Systems (Link to Presentation)

Author: Abhishek Naik (University of Alberta), Bo Chang (Google Research), Alexandros Karatzoglou (Google Research), Martin Mladenov (Google Research), Ed H. Chi (Google Research), Minmin Chen (Google Research)

DeepPvisit: A Deep Survival Model for Notification Management (Link to Presentation)

Author: Guangyu Yang (LinkedIn), Efrem Ghebreab (LinkedIn), Jiaxi Xu (LinkedIn), Xianen Qiu (LinkedIn), Yiping Yuan (LinkedIn), Wensheng Sun (LinkedIn)

Skill Graph Construction From Semantic Understanding (Link to Presentation)

Author: Shiyong Lin (LinkedIn), Yiping Yuan (LinkedIn), Carol Jin (LinkedIn), Yi Pan (LinkedIn)

Dynamic Embedding-based Retrieval for Personalized Item Recommendations at Instacart (Link to Presentation)

Author: Chuanwei Ruan (Instacart)

Our organizers and committee members are dedicated to providing a first-class workshop experience for our contributors and attendees.

Da is a Staff AI Engineer at LinkedIn.

Tobias Schnabel is a senior researcher in the Productivity+Intelligence group at Microsoft Research.

Xiquan is a Senior Manager of Data Science at homedepot.com.

Sarah is an Associate Professor with the CS Department of Cornell University.

Jianpeng is a Data Science Manager with the Personalization team at Walmart Labs.

Aniket is an Applied Scientist at Amazon.

Bo is an Applied Scientist at Amazon Ads.

Shipeng is the Director of AI Engineering at LinkedIn.

We aim to deliver more impact to the community year-by-year. Last year's statistics and our growth goal:
